Member-only story

How to Fine-tune Stable Diffusion using LoRA

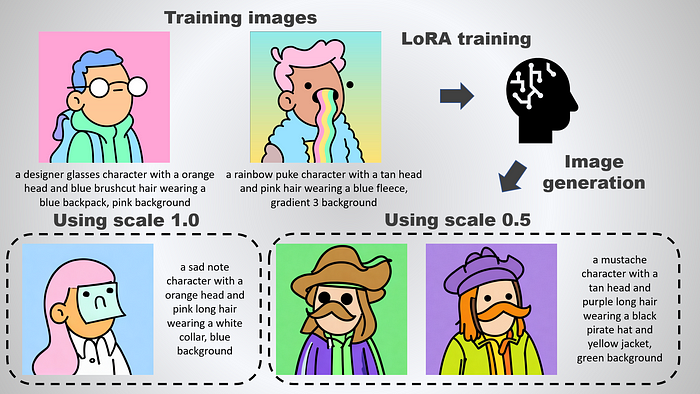

Personalized generated images with custom datasets

Previously, I have covered the following articles on fine-tuning the Stable Diffusion model to generate personalized images:

- How to Fine-tune Stable Diffusion using Textual Inversion

- How to Fine-tune Stable Diffusion using Dreambooth

- The Beginner’s Guide to Unconditional Image Generation Using Diffusers

By default, doing a full fledged fine-tuning requires about 24 to 30GB VRAM. However, with the introduction of Low-Rank Adaption of Large Language Models (LoRA), it is now possible to do fine-tuning with consumer GPUs.

Based on a local experiment, a single process training with batch size of 2 can be done on a single 12GB GPU (10GB without

xformers, 6GB withxformers).

LoRA offers the following benefits:

- less likely to have catastrophic forgetting as the previous pre-trained weights are kept frozen

- LoRA weights have fewer parameters than the original model and can be easily portable

- allow control to which extent the model is adapted toward new training images (supports interpolation)

This tutorial is strictly based on the diffusers package. Training and inference…